Happiness is a 100%-coverage unit test suite.

— pmjones (@pmjones) November 20, 2014100% code coverage should never really be a goal. If you're practicing TDD, it should really just happen for small parts of your codebase, but realistically even the most astute TDD practitioners break the rules now and then. Striving to ensure 100% code coverage usually means you end up writing tests to meet that goal, rather than the actual goals of maintaining a test suite.

I feel pursuing 100% coverage in a PHP project is a particularly poor idea as our tooling generally only provides Line Coverage. Line coverage simply states if a line has been executed, which can naturally produce false positives, where the code contains conditionals or several statements on one line.

There are more reasonable coverage metrics to use to measure the quality of a test suite. Sebastian Bergmann and Derick Rethans are working hard on bringing some of these options to us, but for now we're limited to line coverage. Let's take a look at the a couple of different coverage types with a very contrived, but hopefully clear example.

function foo($a, $b)

{

$string = '';

if (1 === $a || 1 < $b) {

$string.= 'bar';

}

return $string;

}

/** @test */

function line_coverage()

{

$this->assertEquals('bar', foo(0, 1000));

}

This first test case covers all of the lines within the function, so we have

Line Coverage. It also gives us Function Coverage, as we've executed all of the

functions in the program. However, it should be quite clear that we haven't

sufficiently exercised the system under test. Our first improvement will give us

Branch Coverage, where each boolean expression in a control structure needs to

have been evaluated to both true and false throughout the test run.

/** @test */

function branch_coverage()

{

$this->assertEquals('', foo(0, 0));

}

Great, we've covered the two branches within the function. Our tests are hardly

exhaustive though. Our next aim could be Path Coverage, where every unique

combination of branches is exercised, but with only one control structure and

having met Branch Coverage, we already have Path Coverage. It looks like

Condition Coverage would give us some improvements. Condition Coverage asserts

that each boolean sub-expression has evaluated to both true and false. So in

this case, we'd need to be sure both 1 === $a and 1 < $b have both been

evaluated to both true and false. Given our existing tests, we're yet to have 1

=== $a evaluate to true.

function condition_coverage()

{

$this->assertEquals('', foo(1, 0));

}

Things are certainly starting to look better now, but even Condition Coverage wont necessarily mean you've sufficiently covered the code. I could go on covering more complex types of code coverage, but you get the idea and you can read up on that. Needless to say, they can be both hard to achieve and hard to measure.

Anyway, I saw this tweet pop up in my timeline and feeling a little bored, I sent a cheeky retort and then set myself a challenge, how quickly could I disprove that 100%? Turns out quite quickly. Within 12 minutes, I had found something that hadn't been sufficiently covered on Aura.Router and I had submitted a PR with an improved test.

I found the weakness in coverage using a mutation

testing tool. In brief,

mutation testing is the practice of changing something in the production code,

such as changing a literal value, and making sure the tests fail. If none of the

tests fail, there's a good chance the test coverage is insufficient. For

example, this diff shows a mutation where the literal value 0 has been changed

to 123.

- $score = isset($score[$type]) ? $score[$type] : 0;

+ $score = isset($score[$type]) ? $score[$type] : 123;

If the test suite for this project where to pass with such a modification, it would suggest that the tests are only covering the truthy outcome of the conditional.

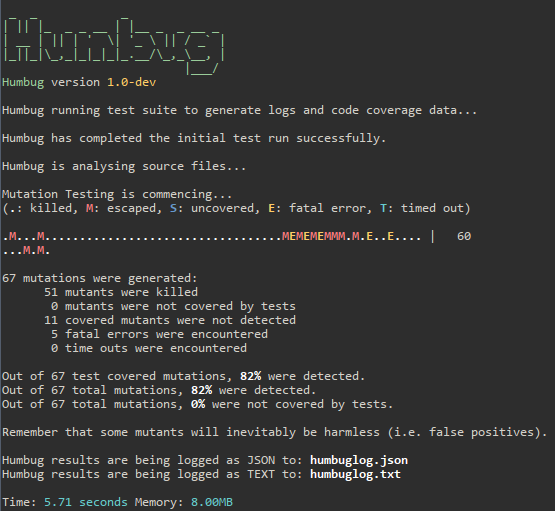

Just this week my friend Pádraic released a new mutation testing tool, humbug. As it was based on one of Paddy's earlier inventions that I have some experience with, I thought I'd check it out and see if it could find anything else in the Aura projects for me. After a little bit of hacking on it, I had humbug running on Aura.Router.

The most interesting information it showed was that 11 covered mutants where not detected by the test suite. That is, 11 mutations to the production code that was detected as being covered with line coverage, were not detected by the test suite.

A cursory glance at a couple of the 11 mutants suggested they might not be

interesting to pursue (there were quite a few that changed return $this; to

return null;), but the one at the top of the list caught my eye. It looked

like a complicated assignment and was also related to the code I sent the

previous pull request in for. The mutation is a simple one, replace a greater

than operator, with a greater than or equals.

1) Humbug\Mutator\ConditionalBoundary\GreaterThan

Diff on \Aura\Router\Router::match() in /home/davem/src/Aura.Router/src/Router.php:

--- Original

+++ New

@@ @@

$better_match = ! $this->failed_route

- || $route->score > $this->failed_route->score;

+ || $route->score >= $this->failed_route->score;

if ($better_match) {

$this->failed_route = $route;

}

}

$this->matched_route = false;

Looking further at the actual Aura code, it suggests that the closest match of a set of routes that failed to match exactly, all things being equal, is the route that was added to the router first. The mutation changes the production code, so that any subsequent failed matches, with a matching score as good as the previously closest match, becomes the new closest match.

The existing test suite wasn't testing the code with a sufficient range of values, such that the greater than operator was being tested at it's boundaries. Writing a test to cover this was fairly trivial, adding a couple of Routes that will create the same score but fail to match and asserting the first one that was added is considered the best match.

public function testGetFailedRouteIsBestMatchWithPriorityGivenToThoseAddedFirst()

{

$post_bar = $this->router->addPost('post_bar', '/bar');

$delete_bar = $this->router->addDelete('delete_bar', '/bar');

$route = $this->router->match('/bar', array());

$this->assertFalse($route);

$this->assertSame($post_bar, $this->router->getFailedRoute());

$this->assertEquals($post_bar->score, $delete_bar->score, "Assert scores were actually equal");

}

Another PR on it's way. This kind of coverage would fall under Parameter Value Coverage, whereby you ensure that code has been covered with all common possible values. It's a bit complicated with complex types, but with a router like this, those common values might be:

- Router with no routes

- Router with one non-matching route

- Router with one matching route

- Router with 2 matching routes

- Router with one matching and one non-matching route

- Router with two non-matching routes

- Router with two non-matching but equivalent scoring routes

- Router with a large amount of routes

- etc

I can't think of any way in which a tool could effectively supply a metric regarding Parameter Value Coverage with complex types. Primitives might be possible, but generally you'd be better following the principles of Property based testing, whereby you declare the properties and have the tool generate test cases.

Mutation testing is a great thing to have a grasp of in theory, but it's not particularly easy to practice. The tools are very hard to write and then their output is often hard to understand or interpret effectively. I wouldn't recommend practicing with mutation testing on a regular basis, but it's certainly worth considering on the odd occasion. Remember, tests shouldn't be considered valid tests until you've seen them fail at least once.

TLDR; Code coverage metrics are nice, but don't trust them without knowing their flaws.

I may have slightly picked on Paul Jones and the Aura project here, hopefully they'll take it in good faith. I targeted Aura because Paul tweeted about it and they have quite a few libraries with high line coverage. While I don't necessarily agree with some of their goals, their involvement in this post was merely circumstantial.